These steps are the functional steps in achieving the end goal of a data application. Meet Datomai, an enterprise-grade cloud data pipeline platform that brings together all your datoms (ˈdādom, ˈdadom: the smallest piece of information).

Sustainable Ai And The New Data Pipeline Full Stack Feed

A data pipeline is the automated transfer of data from a source to a destination.

AI data pipeline. Data pipelines help achieve this. The tf.data API was built with three major focal points. Whether it is consolidating several files into one report, transferring data from a source system into a data warehouse, or event streaming, there is a wide range of use cases for a data pipeline.

By combining data from disparate sources into one common destination, data pipelines ensure quick data analysis for insights. We will now see how we can define a Kubeflow Pipeline and run it in Vertex AI using the sklearn breast_cancer_dataset. It is an automated.

With AI Platform Pipelines, you can set up a Kubeflow Pipelines cluster in 15 minutes, so you can quickly get started with ML pipelines. To understand how a data pipeline works, think of any pipe that receives something from a source and carries it to a destination. See how information moves through the four stages of the AI data pipeline, and where Intel Optane SSDs and 3D NAND SSDs play a critical role.
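The pipe analogy can be sketched in a few lines of plain Python. This is only an illustration, not the API of any platform named here: the `source`, `transform`, and `load` functions, the sample records, and the `warehouse` list are all hypothetical stand-ins.

```python
from typing import Iterable, Iterator


def source() -> Iterator[str]:
    """Hypothetical source: yields raw records one at a time."""
    yield from [" alice,30 ", "bob,25", " carol,41"]


def transform(records: Iterable[str]) -> Iterator[dict]:
    """Clean each raw record and parse it into a dictionary."""
    for raw in records:
        name, age = raw.strip().split(",")
        yield {"name": name.title(), "age": int(age)}


def load(rows: Iterable[dict], destination: list) -> int:
    """Append every row to the destination and return the row count."""
    count = 0
    for row in rows:
        destination.append(row)
        count += 1
    return count


# A list stands in for a real destination such as a database table.
warehouse: list = []
loaded = load(transform(source()), warehouse)
print(loaded, warehouse[0])
```

Because each stage is a generator, records flow through one at a time, much like material moving through a pipe, rather than being held in memory all at once.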

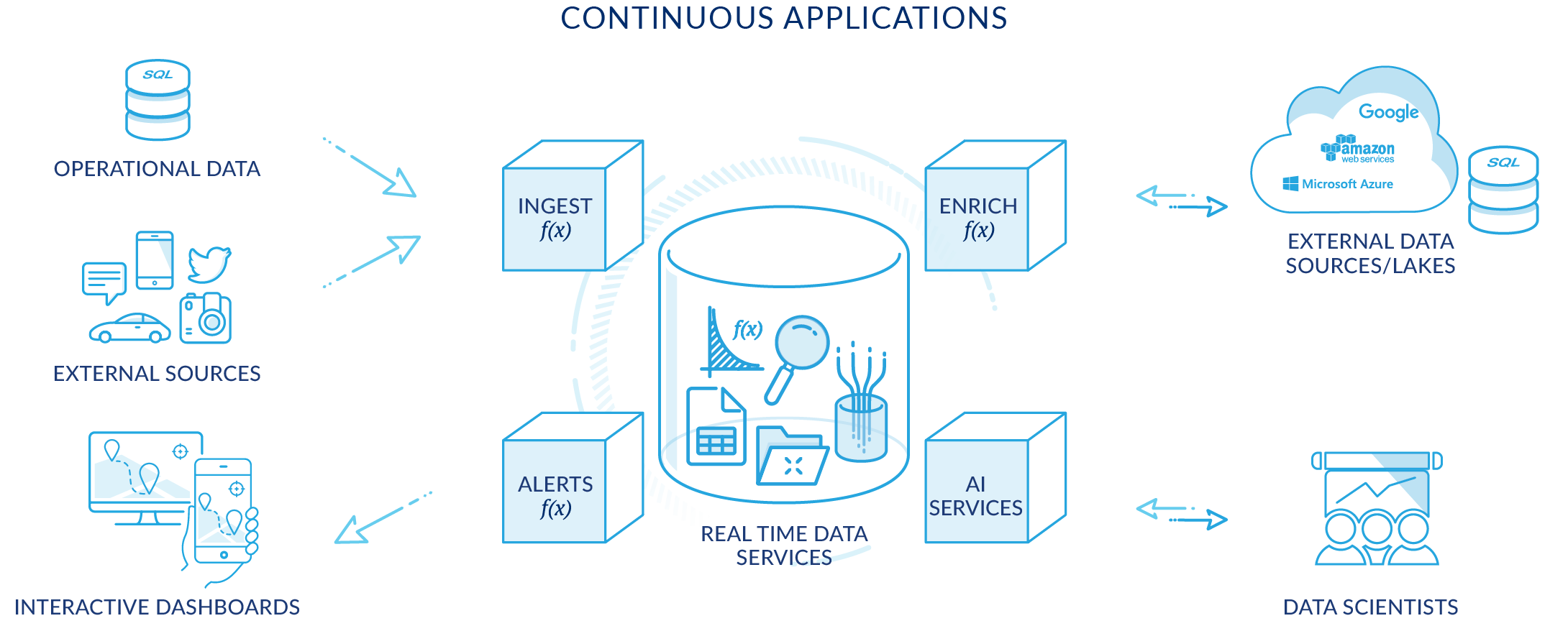

More specifically, a data pipeline is an end-to-end process to ingest, process, prepare, transform, and enrich structured, unstructured, and semi-structured data in a governed manner. In software engineering, people build pipelines to develop software that is. From preprocessing data so it's most valuable to feeding the data to the neural networks for model training, NetApp AI solutions for the core remove performance bottlenecks and accelerate AI workloads.

A customized example with a toy dataset. What is a data pipeline? For those who don't know it, a data pipeline is a set of actions that extract data (or directly analytics and visualization) from various sources.

We often use the term. Thus creating an automated data pipeline to reduce man made. That's the simple definition.

Accelerate analysis in the cloud. The data pipeline ensures that the steps run identically and consistently for all data being transferred. We are now defining a Pipeline with 3 simple steps, known in KFP as components. The first is to ingest data and separate train and test splits.

A data pipeline is effectively the architectural system for collecting, transporting, processing, transforming, storing, retrieving, and presenting data. So the focus of this article is to talk about holistic data systems, whether they're ML/AI or not, as a data pipeline. You can move millions of rows of data in minutes and natively connect to 150 of the most popular internal and external applications, databases, files, and events, including Microsoft.

Such a pipeline can take many forms. We transfer and load these data to desired destinations and visualize them by implementing unique rules. We extract and unify data from formats such as paper documents, GIS, BIM, CAD, and PDF.

Datom allows you to bring insight into your organization in an instant. A pipeline is a generalized but very important concept for a data scientist. Companies use data pipelines to unlock the potential of their data as quickly as possible.

Data pipelines automate the movement and transformation of data. The growing need for data pipelines. Building a Modern AI Data Storage Pipeline: modern storage infrastructures need an AI data pipeline that can deliver at every stage.

Data pipelines move data from one source to another so it can be stored, used for analytics, or combined with other data. A data pipeline provides enterprises access to reliable and well-structured datasets for analytics. Train our model using the train split from step 1.
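The ingest, split, and train steps can be sketched without any ML framework. What follows is a plain-Python stand-in, not Kubeflow Pipelines code and not the sklearn dataset: the toy data, the nearest-centroid model, and the function names are assumptions made for illustration, and the third step (evaluation) is a guess, since the text only describes the first two.

```python
import random
from statistics import mean


def ingest_and_split(test_fraction=0.25, seed=0):
    """Step 1: build a toy dataset and split it into train and test sets."""
    rng = random.Random(seed)
    # Two well-separated 1-D clusters: label 0 near 0.0, label 1 near 10.0.
    data = [(rng.gauss(0.0, 1.0), 0) for _ in range(40)]
    data += [(rng.gauss(10.0, 1.0), 1) for _ in range(40)]
    rng.shuffle(data)
    cut = int(len(data) * (1 - test_fraction))
    return data[:cut], data[cut:]


def train(train_rows):
    """Step 2: fit a nearest-centroid model on the train split."""
    return {
        label: mean(x for x, y in train_rows if y == label)
        for label in {y for _, y in train_rows}
    }


def evaluate(model, test_rows):
    """Step 3 (assumed): score the model's accuracy on the test split."""
    def predict(x):
        # Pick the label whose centroid is closest to x.
        return min(model, key=lambda label: abs(x - model[label]))

    hits = sum(predict(x) == y for x, y in test_rows)
    return hits / len(test_rows)


train_rows, test_rows = ingest_and_split()
model = train(train_rows)
accuracy = evaluate(model, test_rows)
print(f"accuracy={accuracy:.2f}")
```

Each function takes only the output of the previous one, which is the property that lets a pipeline tool run the steps as separate, reusable units.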

In this article we're going to explore the tf.data API, a very powerful tool built into TensorFlow that provides the flexibility needed to build highly optimized data pipelines. A pipeline also may include filtering and features that provide resiliency against failure. The core is the heart of your AI data pipeline, and it demands high I/O performance.
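Filtering and resiliency against failure can be illustrated with a small, framework-free sketch. It does not use tf.data; the `FlakySource` class, the `with_retries` helper, and the sample rows are hypothetical names invented for this example.

```python
import time


def with_retries(func, attempts=3, delay=0.0):
    """Call func, retrying on ConnectionError up to `attempts` times."""
    last_error = None
    for _ in range(attempts):
        try:
            return func()
        except ConnectionError as err:
            last_error = err
            time.sleep(delay)  # wait before the next attempt
    raise RuntimeError(f"gave up after {attempts} attempts") from last_error


class FlakySource:
    """Hypothetical source that fails on its first two reads."""

    def __init__(self):
        self.calls = 0

    def read(self):
        self.calls += 1
        if self.calls < 3:
            raise ConnectionError("temporary outage")
        return [
            {"id": 1, "value": 10},
            {"id": 2, "value": None},  # incomplete row
            {"id": 3, "value": 7},
        ]


def drop_incomplete(rows):
    """Filter step: keep only rows whose value is present."""
    return [row for row in rows if row["value"] is not None]


flaky = FlakySource()
rows = with_retries(flaky.read, attempts=5)
clean = drop_incomplete(rows)
print(flaky.calls, [row["id"] for row in clean])
```

The retry wrapper absorbs the two simulated outages, and the filter removes the row with a missing value before it reaches any later stage.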

AI Platform Pipelines also. A data pipeline is a set of actions that ingest raw data from disparate sources and move the data to a destination for storage and analysis.
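As a rough sketch of that definition, the example below ingests raw data from two differently formatted sources and moves it into one destination for analysis. The inline CSV and JSON strings, the `orders` table, and the reader functions are assumptions for illustration; an in-memory SQLite database stands in for a real warehouse.

```python
import csv
import io
import json
import sqlite3

# Two hypothetical raw sources in different formats.
CSV_SOURCE = "id,amount\n1,19.99\n2,5.00\n"
JSON_SOURCE = '[{"id": 3, "amount": 42.5}]'


def read_csv(text):
    """Yield (id, amount, origin) tuples from CSV text."""
    for row in csv.DictReader(io.StringIO(text)):
        yield int(row["id"]), float(row["amount"]), "csv"


def read_json(text):
    """Yield (id, amount, origin) tuples from a JSON array."""
    for item in json.loads(text):
        yield int(item["id"]), float(item["amount"]), "json"


# One common destination with a single unified schema.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL, origin TEXT)"
)
for reader, payload in ((read_csv, CSV_SOURCE), (read_json, JSON_SOURCE)):
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", reader(payload))
conn.commit()

# Analysis now runs against the combined data.
row_count, total = conn.execute(
    "SELECT COUNT(*), SUM(amount) FROM orders"
).fetchone()
print(row_count, round(total, 2))
```

Normalizing every source to the same tuple shape before loading is the design choice that makes the final query independent of where each row came from.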